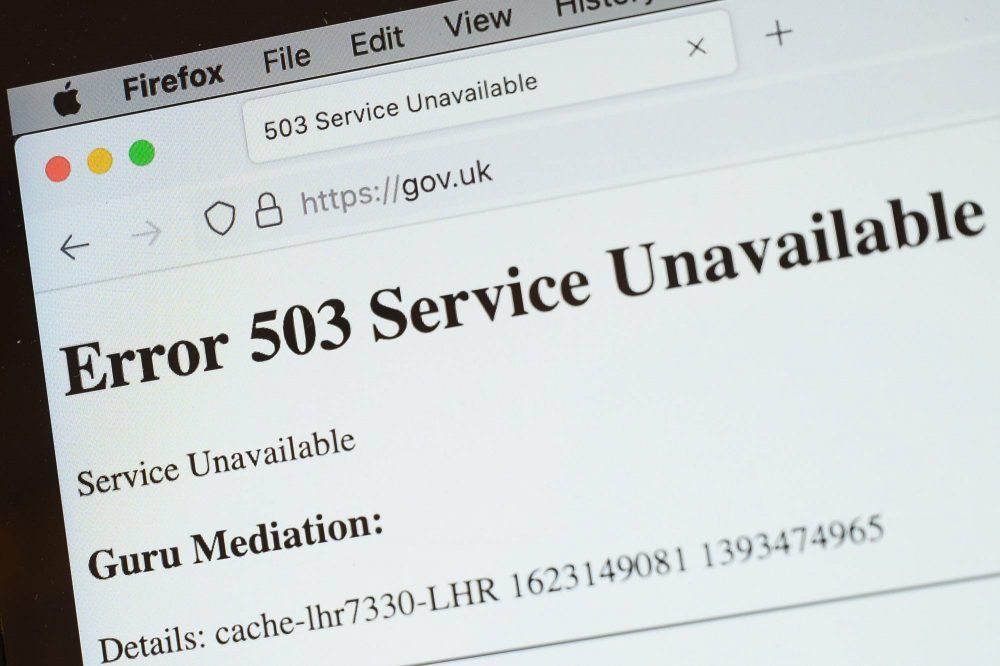

Here is another example of a highly visible outage, this time experienced by Fastly. In this case, we highlight how identifying the customer configuration change was instrumental to Fastly's ability to quickly isolate the problem and restore service.

On June 8, the CDN provider Fastly experienced an outage that disrupted 85% of its network. The incident was triggered by a customer configuration change that exposed a bug introduced in an earlier software deployment on May 12.

The good news is that Fastly's monitoring system detected the outage within one minute; however, it still took 40 minutes to identify the configuration change. In complex systems designed with resiliency in mind, problems often do not present themselves immediately. Instead, they build up gradually over time and catch the operational support team unaware. Troubleshooting these kinds of problems can be extremely difficult, especially if you lack visibility into configuration changes throughout your environment, and specifically into the changes that correspond to completed change requests. Like most engineers, I can barely remember what I did yesterday, let alone a week ago.

Change management and operational support processes can be greatly improved by implementing Configuration Change Monitoring, which provides visibility into all configuration changes across the infrastructure: networks, servers, services, applications, virtualization and cloud.

Configuration Change Monitoring is an invaluable tool for identifying and isolating the root cause of complex incidents, because it provides the details of every change, whether planned, unplanned or unauthorized.
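To make this concrete, here is a minimal Python sketch of the kind of question Configuration Change Monitoring lets you answer during an incident: "what changed in the window before the alert fired, and was each change tied to a change request?" The `ChangeRecord` schema, the `changes_before` function, and the hosts, paths and change request IDs in the usage section are all hypothetical illustrations, not SIFF.IO's API or Fastly's tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ChangeRecord:
    """One captured configuration change (hypothetical schema)."""
    timestamp: datetime
    host: str
    path: str
    change_request: Optional[str]  # None: no matching change request was found

    @property
    def status(self) -> str:
        # Without a change request we cannot tell unplanned from unauthorized,
        # so both are flagged together for a closer look.
        return "planned" if self.change_request else "unplanned/unauthorized"


def changes_before(records: List[ChangeRecord], incident_at: datetime,
                   window: timedelta) -> List[ChangeRecord]:
    """Return changes made within `window` before `incident_at`, newest first."""
    start = incident_at - window
    recent = [r for r in records if start <= r.timestamp <= incident_at]
    return sorted(recent, key=lambda r: r.timestamp, reverse=True)


if __name__ == "__main__":
    alert = datetime(2024, 1, 1, 12, 0)  # illustrative alert time
    log = [
        ChangeRecord(alert - timedelta(minutes=3), "edge-01", "/etc/cdn/service.conf", None),
        ChangeRecord(alert - timedelta(hours=2), "edge-02", "/etc/cdn/tls.conf", "CR-1042"),
        ChangeRecord(alert - timedelta(days=27), "build", "/etc/cdn/release.conf", "CR-0977"),
    ]
    # List everything that changed in the 24 hours before the alert.
    for r in changes_before(log, alert, timedelta(hours=24)):
        print(r.timestamp.isoformat(), r.host, r.path, r.status)
```

Sorting newest first puts the most likely suspects at the top. Note that a narrow window would miss a latent change like the earlier deployment in the Fastly incident, so in practice the window may need to be widened step by step.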

Used during the post-implementation review step, Configuration Change Monitoring can also significantly reduce the number of incidents by catching errors early, before they become outages. Automatically capturing the actual configuration changes and making them available for peer review greatly reduces both human and automation errors.
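As a rough illustration of the peer-review step, the sketch below uses Python's standard `difflib.unified_diff` to turn "before" and "after" snapshots of a configuration file into a reviewable diff. How the snapshots are collected is out of scope here; the `config_diff` helper and the `edge.conf` contents are hypothetical examples, not part of any specific product.

```python
import difflib
from typing import List


def config_diff(before: str, after: str, name: str) -> List[str]:
    """Return a unified diff of two config snapshots, ready for peer review.

    An empty list means the snapshots are identical (nothing to review).
    """
    return list(difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"{name} (before)",
        tofile=f"{name} (after)",
    ))


if __name__ == "__main__":
    # Hypothetical snapshots captured before and after a change was implemented.
    before = "listen 80\nworkers 4\ncache_ttl 300\n"
    after = "listen 80\nworkers 8\ncache_ttl 300\n"
    print("".join(config_diff(before, after, "edge.conf")), end="")
```

The reviewer sees only the lines that actually changed (`-workers 4` / `+workers 8`), which makes it easier to check the implemented change against what the change request approved.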

If you’re responsible for your company’s infrastructure operations and interested in improving your incident response, visit SIFF.IO to find out “What the #%&$ changed?!”